
|

[Subjects] [Talks] [Workshop organization]

Control of generative models

With Perla Doubinsky (PhD student financed by the AHEAD ANR programme with the CEA), Hervé Le Borgne (CEA) and Nicolas Audebert (CEDRIC). Associated publications are [DACB22], [DAC23] and [DAC24]. |

|

Deep learning for visual scene understanding

With Wafa Aissa (PhD student financed by a CIFRE with XXii) and Marin Ferecatu (CEDRIC). Associated publications are [AFC23] and [AFC23b]. |

|

Zero-Shot Learning, Generalized Zero-Shot Learning

This work with Yannick Le Cacheux and Hervé Le Borgne (CEA) focused on Zero-Shot Learning (ZSL), which aims at recognizing classes that have no training samples, with the help of available semantic class prototypes. We have shown in [CBC19] that a simple approach combining calibration and well-balanced regularization improves the results of many existing methods for Generalized ZSL (where candidate classes for recognition are not limited to the unseen classes). Moreover, this approach also allows a simple linear model to provide results that are on par with or better than those of the state of the art. In [CBC19b] we identify several implicit assumptions that limit the performance of state-of-the-art methods on real use cases, particularly with fine-grained datasets comprising a large number of classes, and put forward corresponding novel contributions to address them: taking into account both inter-class and intra-class relations, being more permissive with confusions between similar classes, and penalizing visual samples that are atypical of their class. The approach is tested on four datasets, including the large-scale ImageNet, and performs significantly better than recent methods, even generative methods based on more restrictive hypotheses. Finally, in [CBC20a] we study the construction of semantic prototypes from descriptive sentences. A survey of the state of the art is presented in [CBC21]. |
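To make the role of calibration in Generalized ZSL more concrete, here is a minimal sketch. It is not the method of [CBC19]: it assumes that a compatibility score between a visual sample and every class prototype has already been computed, and it simply subtracts a constant from the scores of seen classes before picking the best class. The function name `predict_gzsl`, the parameter `gamma`, the class names and the scores are all invented for illustration.

```python
def predict_gzsl(scores, seen_classes, gamma=0.0):
    """Return the class with the highest calibrated score.

    scores: dict mapping class name -> compatibility score (higher is better).
    seen_classes: set of class names that had training samples.
    gamma: hypothetical calibration constant subtracted from seen-class scores.
    """
    calibrated = {
        cls: (s - gamma) if cls in seen_classes else s
        for cls, s in scores.items()
    }
    return max(calibrated, key=calibrated.get)


# Made-up scores for one test image whose true class is the unseen "zebra".
scores = {"horse": 0.62, "tiger": 0.35, "zebra": 0.55}
seen = {"horse", "tiger"}

uncalibrated = predict_gzsl(scores, seen, gamma=0.0)  # biased towards seen classes
calibrated = predict_gzsl(scores, seen, gamma=0.1)    # penalty lets "zebra" win
```

In this toy case the uncalibrated prediction is the seen class "horse", while a small penalty on seen classes lets the unseen class "zebra" win. In practice the value of such a constant would have to be selected on validation data.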

|

Learning from streaming data

Recent research with Andrey Besedin, Marin Ferecatu (CEDRIC) and Pierre Blanchart (CEA) addresses the difficulties one faces when attempting to learn from streaming data. First, in a stream the data distribution is not stationary: new classes can appear, existing classes can disappear for some time and then reappear, etc. Second, it is practically infeasible to store all the historical labeled data and regularly retrain the models on all of it. We put forward solutions (see [BBC17, BBC18, BBCF20]), mainly based on generative adversarial networks (GANs) and autoencoders, to address these problems. |

|

|

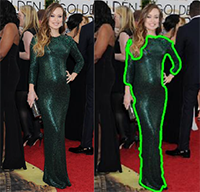

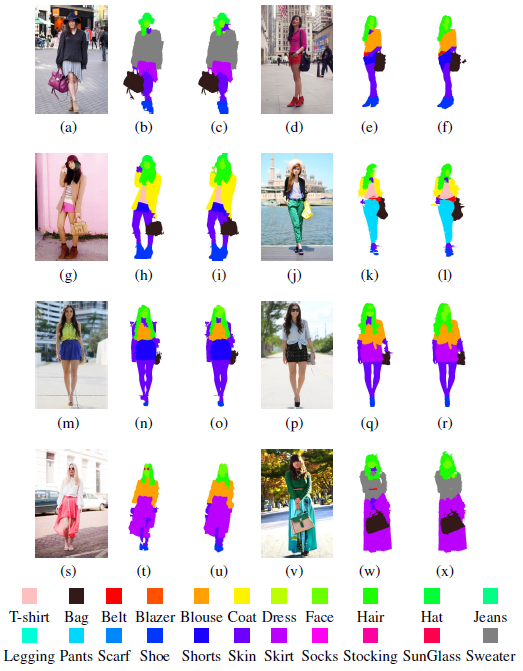

Fashion items extraction from web images

Work with Lixuan Yang (Check Lab) and Marin Ferecatu, aiming to extract various fashion items (especially clothing) from ordinary web images, in order to retrieve similar items from databases. It is important to reliably extract the items in order to be able to describe their shape and obtain relevant retrieval results. Our first results, on long dresses, were published in [YRFC16]. Novel methods, applicable to much broader categories of clothing, were put forward in [YRFC16b, YRFC17]. Lixuan defended her PhD in July 2017. |

|

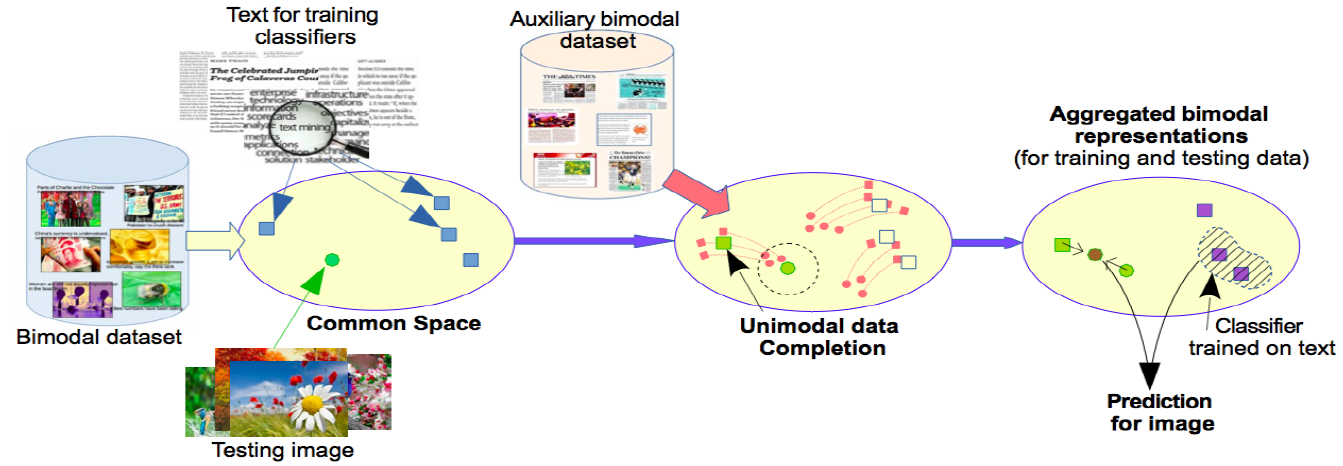

Cross-modal retrieval

This work with Thi Quynh Nhi Tran and Hervé Le Borgne from CEA focussed on cross-modal content-based retrieval: returning images from text queries (text illustration) and returning texts from image queries. The first published results concern a combination of search in a latent space (obtained by kernel canonical correlation analysis) and rare (hence poorly represented in this latent space) but very relevant information, corresponding e.g. to named entities, see [TBC15]. We put forward in [TBC16] a method for "completing" a unimodal representation in order to obtain a bimodal representation, with a significant impact on the performance of cross-modal retrieval. In [TBC16b] we focussed, with very good results, on a novel problem we called "cross-modal classification", consisting in training classifiers on one modality (e.g. text) and applying them to another modality (e.g. image). Nhi defended her PhD in May 2017 and is now with Invivoo. |

|

Action detection and localization in large video databases

Within the Mex-Culture ANR "Blanc International" project we worked with Andrei Stoian, Marin Ferecatu and Jenny Benois-Pineau (from LABRI) on the localization and search (including interactive search) of actions in video, see [SFBC15b] and [SFBC15a]. We proposed a two-stage detection system. At the first stage, a major part of the video content is filtered out using compact video representations. A second stage ranks the results using 1-class SVMs with a global alignment kernel [SFBC15b]; a novel feature selection method adapted to sequences allows this kernel to be effective. We also introduced the notion of "query by detector" and put forward an approximate method that returns the occurrences of an action in the database without having to exhaustively scan the video content [SFBC15a]. An action detection and evaluation database, called MEXaction2, was made publicly available on the web site of the project. |
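The general filter-then-rank idea behind a two-stage system can be sketched as follows. This is only an illustration under strong simplifying assumptions, not the system of [SFBC15b]: the "compact representation" is a hypothetical (mean, min, max) summary of a one-dimensional sequence, and the second stage uses a plain dynamic time warping distance as a stand-in for the 1-class SVM with a global alignment kernel. All names and data are invented.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences of numbers."""
    inf = float("inf")
    cost = [[inf] * (len(b) + 1) for _ in range(len(a) + 1)]
    cost[0][0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[len(a)][len(b)]


def compact(seq):
    """Cheap fixed-size summary of a sequence (hypothetical): (mean, min, max)."""
    return (sum(seq) / len(seq), min(seq), max(seq))


def two_stage_search(query, database, keep=2):
    """Stage 1 keeps the `keep` items closest to the query by compact summary;
    stage 2 ranks only those survivors with the costlier DTW distance."""
    q = compact(query)
    survivors = sorted(
        database, key=lambda name: math.dist(q, compact(database[name]))
    )[:keep]
    return sorted(survivors, key=lambda name: dtw_distance(query, database[name]))


# Toy "video" sequences (invented data).
database = {
    "clip_a": [0, 1, 2, 3, 2, 1, 0],
    "clip_b": [5, 5, 5, 5, 5],
    "clip_c": [0, 0, 1, 2, 3, 2, 1],
    "clip_d": [9, 8, 9, 8, 9],
}
query = [0, 1, 2, 3, 2, 1]
ranking = two_stage_search(query, database, keep=2)
```

The expensive alignment is computed only for the two clips that survive the cheap filter, which is what makes such a scheme attractive for large databases.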

|

Clustering (2005-2010)

The first goal of our work on active semi-supervised clustering with Nizar Grira (PhD student at INRIA) and Nozha Boujemaa (Research Director at INRIA) in the IMEDIA team of INRIA Rocquencourt was to make it possible to include, during a clustering process, the must-link and cannot-link pairwise constraints provided by an (expert) user. We proposed an extension of the Competitive Agglomeration method, based on a cost function integrating a term specific to the pairwise constraints [GCB06]. In order to optimize the exploitation of the user-provided constraints, we developed a new, active version of the algorithm [GCB08], applied to the Arabidopsis data of the BIOTIM project (supported by the French National Research Agency). This work partly motivated the launch of the Active and Semi-Supervised Learning e-team in the MUSCLE European FP6 Network of Excellence. In many cases, a dataset can be clustered following several criteria that complement each other: group membership following one criterion provides little or no information regarding group membership following the other criterion. When these criteria are not known a priori, they have to be determined from the data. In [PC10] we put forward a new method, inspired by tree-component analysis, for jointly finding the complementary criteria and the clustering corresponding to each criterion. |
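As a rough illustration of what "a cost function integrating a term specific to the pairwise constraints" can look like, the sketch below adds a penalty for each violated must-link or cannot-link constraint to an ordinary within-cluster squared-distance cost. It is a deliberately simplified, hard-assignment example and not the fuzzy cost function of [GCB06]; the function name, the weight `alpha` and the data are assumptions made for illustration.

```python
def constrained_cost(points, labels, centers, must_link, cannot_link, alpha=1.0):
    """Within-cluster squared distance plus alpha times the number of
    violated pairwise constraints (simplified, hard assignments)."""
    compactness = sum(
        sum((p - c) ** 2 for p, c in zip(points[i], centers[labels[i]]))
        for i in range(len(points))
    )
    # A must-link pair is violated when its two points get different labels.
    violations = sum(labels[i] != labels[j] for i, j in must_link)
    # A cannot-link pair is violated when its two points share a label.
    violations += sum(labels[i] == labels[j] for i, j in cannot_link)
    return compactness + alpha * violations


# Invented toy data: four points, two cluster centers, two user constraints.
points = [(0.0, 0.0), (0.0, 2.0), (4.0, 0.0), (4.0, 2.0)]
centers = [(0.0, 1.0), (4.0, 1.0)]
must_link = [(0, 1)]     # points 0 and 1 should share a cluster
cannot_link = [(1, 2)]   # points 1 and 2 should be separated

good = constrained_cost(points, [0, 0, 1, 1], centers, must_link, cannot_link)
bad = constrained_cost(points, [0, 1, 1, 1], centers, must_link, cannot_link)
```

The assignment that respects both constraints has a lower cost than the one that violates them, so minimizing such a cost steers the clustering towards the user's feedback.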

|

Interactive image retrieval with relevance feedback (2003-2010)

The work with Marin Ferecatu (PhD student at INRIA) and Nozha Boujemaa (Research Director at INRIA) within the IMEDIA team of INRIA Rocquencourt concerned the optimisation of active learning [FCB04a], [FCB04b] and hybrid search combining visual and conceptual image descriptions [FBC08a], [FBC08b]. In collaboration with Jean-Philippe Tarel (researcher at LCPC) we explored the range of user behaviors during a retrieval session and studied the impact of this behavior on the search mechanisms [CTF08]. Subsequent work, started at INRIA and continued in the Vertigo team with Daniel Estevez (engineer at CNAM), Vincent Oria (Professor at New Jersey Institute of Technology) and Jean-Philippe Tarel, mainly focussed on the scalability of relevance feedback. We put forward an extension of the M-tree, built in the feature space associated with a kernel and dealing with hyperplane queries, which allowed us to perform interactive searches with relevance feedback in databases of about 110000 images [CEOT09]. Ongoing work with Wajih Ouertani (PhD student at INRA) and Nozha Boujemaa, within the Pl@ntNet project, concerns interactive search with local image features; the first results are shown in [OCB10], [OBC10]. |

|

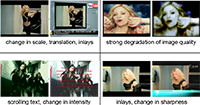

Content-based video copy detection for video stream monitoring and video mining (2005-2010)

The main applications of content-based video copy detection concern rights enforcement and video mining. The scalability of copy detection is one of the main challenges for dealing with real-world problems. Our research in this domain with Sébastien Poullot (PhD student Vertigo-INA) and Olivier Buisson (researcher at INA), in relation with the Sigmund project (supported by the French RIAM network), addresses both stream monitoring and video mining. For the first application, we put forward an indexing solution based on models of the data and of the transformations encountered for video copies. This allows us to monitor, with a single ordinary computer, in deferred real time, one video stream against a database of 280000 hours of original video content (part of the results were published in [PBC07], [PCB09]). While this method could also be used for video mining by copy detection, we developed an alternative approach that makes mining even more scalable. We suggested more compact representations (named Glocal) for video keyframes, together with an indexing method combining ideas from locality-sensitive hashing and inverted lists in order to speed up the similarity self-join operation on the signature database. This approach allows us to mine a database of 10000 hours of video in 3 days [PCB08], and to mine the roughly 70 hours of video returned by a Web 2.0 site in response to a keyword-based query in about 45 seconds [PCB08c], again with an ordinary computer. An efficient parallel implementation of the proposed method is also possible [PCB08b]. These results were significantly improved (both for precision/recall and for retrieval speed), in collaboration with Shin'ichi Satoh, from the National Institute of Informatics (Tokyo), by using local groups of local features when indexing the Glocal signatures, together with simple geometric configuration information [PCSC09].
Some new ideas regarding video copy detection (local temporal summaries, multi-level LSH) were first applied to the detection of multi-variant audio tracks (or cover songs), in collaboration with Vincent Oria and Yi Yu from the New Jersey Institute of Technology [YCOC09], [YCOD10]. |
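The way hashing and inverted lists can speed up a similarity self-join may be easier to see on a toy example. The sketch below is a generic illustration, not the Glocal indexing scheme of [PCB08]: signatures are hypothetical 2-D vectors, the hash key is the sign pattern of the vector with respect to a few hyperplanes (hand-picked here so that the example is deterministic; they would normally be drawn at random), and only signatures falling in the same bucket are compared.

```python
from collections import defaultdict
from itertools import combinations
import math


def hash_key(vector, hyperplanes):
    """One bit per hyperplane: the side of the hyperplane the vector lies on."""
    return tuple(
        sum(v * h for v, h in zip(vector, plane)) >= 0 for plane in hyperplanes
    )


def self_join(signatures, hyperplanes, max_dist):
    """Return pairs of ids that share a bucket and lie within max_dist."""
    buckets = defaultdict(list)  # inverted lists: hash key -> list of ids
    for item_id, vec in signatures.items():
        buckets[hash_key(vec, hyperplanes)].append(item_id)

    pairs = set()
    for ids in buckets.values():
        # Distances are computed only inside a bucket, never across buckets.
        for a, b in combinations(sorted(ids), 2):
            if math.dist(signatures[a], signatures[b]) <= max_dist:
                pairs.add((a, b))
    return pairs


# Invented keyframe signatures; k1/k2 and k3/k4 are near-duplicates.
signatures = {
    "k1": (1.0, 2.0),
    "k2": (1.1, 2.1),
    "k3": (-3.0, 0.5),
    "k4": (-3.1, 0.4),
    "k5": (4.0, -4.0),
}
hyperplanes = [(1, 0), (0, 1), (1, 1), (1, -1)]
near_duplicates = self_join(signatures, hyperplanes, max_dist=0.5)
```

The join is approximate: two similar signatures separated by a hyperplane end up in different buckets and are missed, which is the usual price paid for avoiding the quadratic all-pairs comparison.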

|

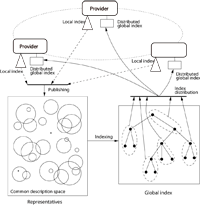

Search by multimedia content in distributed heterogeneous environment (2007-2009)

Our work on this issue, with François Boisson (PhD student in Vertigo) and Dan Vodislav (Professor at the University of Cergy-Pontoise), is part of the DISCO (Distributed Indexing and Search by COntent) project financed by the French ANR (National Research Agency). We believe that the search for multimedia content by the general public will face at least two major evolutions in the near future: the inclusion of content-based search criteria and a significant growth in the volume of content owned by each provider. In this context, most of the providers will need a local index for efficiently searching by content in their own repository. A significant diversity is expected for the indexing solutions adopted by the various providers. Also, each provider wants to keep full control over its local index, and many consider it an important asset; these local indexes will therefore not be disseminated. In order to make uniform and efficient search possible in this context, we suggest an approach based on the publication by every provider, in a consensual format, of "representatives" associated with the parts of the local index where the provider wants to make its content "findable" by the users. The representatives published by all the providers serve to build a global index that is then distributed over the network and used for answering similarity queries (see [BCV08], [BCV08b]).
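One way to picture the role of published representatives is the toy sketch below. It rests on assumptions that are not taken from [BCV08]: each representative is modelled as a hypothetical (center, radius) ball covering part of a provider's local index, the global index is a flat list, and a range query is forwarded only to providers owning at least one ball that intersects the query ball. Provider names and coordinates are invented.

```python
import math


def build_global_index(published):
    """Flatten the representatives published by all providers into one list
    of (provider, center, radius) entries."""
    return [
        (provider, center, radius)
        for provider, reps in published.items()
        for center, radius in reps
    ]


def route_query(global_index, query, query_radius):
    """Return the providers with at least one representative whose ball
    intersects the query ball; only these need to search their local index."""
    return sorted({
        provider
        for provider, center, radius in global_index
        if math.dist(query, center) <= radius + query_radius
    })


# Invented representatives: (center, radius) balls in a 2-D feature space.
published = {
    "provider_a": [((0.0, 0.0), 1.0), ((5.0, 5.0), 1.0)],
    "provider_b": [((0.5, 0.5), 0.5)],
    "provider_c": [((9.0, 0.0), 2.0)],
}
global_index = build_global_index(published)
targets = route_query(global_index, query=(0.2, 0.1), query_radius=0.3)
```

Here the query is forwarded to two of the three providers, and each of them answers with its own local index, whose internal structure never has to be disclosed.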

Previous research topics: document layout analysis (2000-2002), neural networks and time series (1996-2003), structured representations in neural networks (1991-1995), systolic networks (1989-1991).

20/11/2014 : Invited talk at the workshop "Similarity, K-NN, Dimensionality, Multimedia Databases" (Nov. 20-21, 2014, Rennes, France).

1/11/2014 : Invited talk "Multimedia information retrieval: beyond ranking" at the "International Workshop on Computational Intelligence for Multimedia Understanding" (IWCIM, Paris, 2014).

July 2nd, 2010: Scalability of content-based multimedia retrieval and mining, invited talk (in French) during the Annual meeting of the GDR Information - Interaction - Intelligence (I3)

April 1st, 2009: Scalable Content-Based Video Copy Detection, invited talk at the New Jersey Institute of Technology

January 12th, 2012: TRECVID 2011 feedback and industry challenges

November 3rd, 2010: Scalability of content-based multimedia retrieval and mining (about 35 participants; report (in French) with pointers to the presentations)

June 9th, 2009: Scalability of content-based multimedia information retrieval (about 40 participants; report (in French) with pointers to the presentations)

| Vertigo | CEDRIC | CNAM | © Michel Crucianu | About this site | October 9, 2024 |